Things I read and liked in April

Many of which you should read also

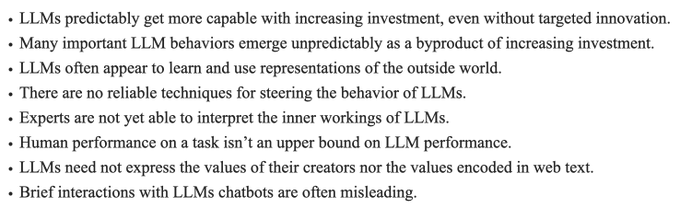

(1) Sam Bowman (NYU, Anthropic) lists “Eight Things to Know About Large Language Models”. Essential reading. Summary thread by Jeffrey Ladish. Here are the Eight Things:

(2) Sasha Chapin and Kathryn Devaney call for more comprehensive scientific study of “awakening” phenomena across various mystical and contemplative traditions.

(3) Some rascal on PhilPapers wrote the following abstract for William James’s 1882 paper in Mind, “Subjective effects of nitrous oxide”:

This is 100% accurate. I recommend the paper; it’s short, and it’s a trip.

(4) Check out the new AI Safety Newsletter from the Center for AI Safety (disclaimer: my employer). Here is the latest issue, which covers new calls for AI regulation and competitive pressures among big AI developers like Anthropic, OpenAI, and Google DeepMind (newly formed from the merger of Google Brain and DeepMind).

(5) From the perspective of animal welfare, ‘vegetarian’ vs ‘not vegetarian’ is not a meaningful category; eggs cause a lot more animal suffering than beef. Ozy Brennan has done some rough calculations on the most bang-for-your-buck diet changes you can make to reduce animal suffering: “you can eliminate 95% of the suffering associated with your diet simply by giving up farmed fish, poultry, and eggs”.

(6) The global biomass of wild animals. This surprised me: “Domestic dogs (Canis lupus familiaris) have a total mass of ≈20 Mt, similar to the combined biomass of all wild terrestrial mammals”.

(7) MacKenzie Scott gives $10 million to the Malaria Consortium.

(8) Julian Hazell and I have a fun conversation about AI sentience. Julian’s subtitle: “Rob and I manage to solve all open questions in Philosophy of Mind in a single conversation” 🤔. And I had another fun discussion about AI sentience with John Danaher!

(9) Jacob Buckman argues that “We Aren’t Close To Creating A Rapidly Self-Improving AI”. It’s a concrete technical argument against an imminent “fast takeoff,” i.e., a very rapid increase in AI capabilities.

(10) Great meme about one of the most annoying genres of argument:

(11) More AI safety going mainstream: an article in the Economist. And here’s UK security minister Tom Tugendhat: “it’s essential that, by the time we reach the development of AGI (artificial general intelligence), we are confident that it can be safely controlled and aligned to our values and interests.”

(12) A reliable technique for catching ChatGPT cheating.

(13) On the Filan Cabinet, Daniel Filan has a characteristically smart and in-depth discussion of Mormonism, with an LDS member.

(14) John Salvatier’s “Reality has a surprising amount of detail” is an all-time classic. In a recent post, Stefan Schubert offers a psychological theory of why the amount of detail should tend to surprise us.

(15) Sam Atis gives a simple account of what happened to hipsters. “Isn’t it more likely that the signals of taste and discernment just changed? It’s not cool to write at Vice anymore, it’s cool to have a widely-read cultural critique Substack.”

(16) Great line from Charlie Schaub in this post: “We all have a cross to bear, but I seem to have two: being cursed to live in interesting times, and having nothing interesting to say about them.” But he is underselling himself.

(17) Let's slow down and enjoy a nice “AI summer harvest”.

(18) Schopenhauer wrote a self-help book, and he begins it by reminding the reader that it’s not actually possible to have a happy life, i.e., a life preferable to non-existence:

Wait, why would he write the book if he thinks it’s wrong? As an illustrative proof that happy lives are impossible, by showing how absurd it would be to obtain one?