Two smart friends of mine asked me for my take on AI sentience

Their questions, my attempts at answers

A couple of weeks ago the good fellows who write the Future Matters newsletter - Matthew van der Merwe and Pablo Stafforini1 - spoke with me about recent controversies about AI sentience, how to talk about AI sentience in a productive manner, and what I actually think about the sentience of the LaMDA system. I’ve reposted the transcript, with permission, from their post here. It has been edited lightly for clarity.2

If you’ve been reading this Substack, you may already know what I think about what the question of AI sentience is and why it matters - which is covered in the first section of the interview. If so, you can skip to the later sections, where we discuss How to discuss AI sentience productively and Large language models and sentience.

AI sentience and why it matters

Future Matters: Your primary research focus at FHI is artificial sentience. Could you tell us what artificial sentience is and why you think it’s important?

Rob Long: I'll start with sentience. Sentience can refer to a lot of different things, but philosophers and people working on animal welfare and neuroscientists often reserve the word sentience to refer to the capacity to experience pain or pleasure, the capacity to suffer or enjoy things. And then artificial, in this context, just refers to being non-biological. I'm usually thinking about contemporary AI systems, or the kind of AI systems we could have in the next few decades. (It could also refer to whole brain emulation, but I usually don't think as much about whole brain emulation consciousness or sentience, for various reasons.) But tying those two together, artificial sentience would be the capacity of AI systems to experience pleasure or pain.

To understand why this research is important we could draw an analogy with animal sentience. It is important to know which animals are sentient in order to know which animals are moral patients, that is, in order to know which animals deserve moral consideration for their own sake. With artificial systems, similarly, we would like to know if we are going to build things whose welfare we need to take into account. And if we don't have a very good understanding of the basis of sentience, and what it could look like in AI systems, then we're liable to accidentally mistreat huge numbers of AI systems. There's also the possibility that we could intentionally mistreat large numbers of them. That is the main reason why it is important to think about these issues. I also think that researching artificial sentience could be part of the more general project of understanding sentience, which is important for prioritizing animal welfare, thinking about the value of our future, and a number of other important questions.

Future Matters: In one of your blog posts, you highlight what you call “the Big Question”. What is this question, and what are the assumptions on which it depends?

Rob Long: The Big Question is: What is the precise computational theory that specifies what it takes for a biological or artificial system to have various kinds of conscious, valenced experiences, that is, conscious experiences that are pleasant or unpleasant, such as pain, fear, and anguish, on the unpleasant side, or pleasures, satisfaction and bliss, on the pleasant side? When I say “conscious valenced experiences” there, that’s meant to line up with what I mean by “sentience” as I was just discussing.

I call it the Big Question since I think that in an ideal world having this full theory and this full knowledge would be very helpful. But as I've written, I don't think we need to have a full answer in order to make sensible decisions, and for various reasons it might be hard for us ever to have the full answer to the Big Question.

There are a few assumptions that you need in order for that question to even make sense, as a way of talking about these things. I'll start with an assumption that you need for this to be an important question, an assumption I call sentientism about moral patienthood. That is basically that these conscious valenced experiences are morally important. So sentientism would hold that if any system at all has the capacity to have these conscious valenced experiences, that would be a sufficient condition for it to be a moral patient. If the system can have these kinds of experiences, then we should take its interests into account. I would also note that I'm not saying that this condition is also necessary. And then in terms of looking for a computational theory, that's assuming that we can have a computational theory both of consciousness and sentience. In philosophy you might call that assumption computational functionalism. That is to say, you don't need to have a certain biological substrate: to have a certain experience you only need to implement the right kinds of computational states.

The Big Question also assumes that consciousness and sentience are real phenomena that actually exist and we can look for their computational correlates, and that it makes sense to do so. Illusionists about consciousness might disagree with that. They would say that looking for a theory of consciousness is not something that could be done, because consciousness is a confused concept and ultimately we'll be looking at a bunch of different things. And then, I also just assume that it's plausible that there could be systems that have these computations. It's not just logically possible, but it's something that actually could happen with non-negligible probability, and that we can actually make progress on working on this question.

Future Matters: In that blog post you also draw a distinction between problems arising from AI agency and problems arising from AI sentience. How do you think these two problems compare in terms of importance, neglectedness, tractability?

Rob Long: Good question. At a high level, with AI agency, the concern is that AIs could harm humanity, whereas with AI sentience the concern is that humanity could harm AIs.

I'll mention at the outset one view that people seem to have about how to prioritize AI sentience versus AI alignment. On this view, AI alignment is both more pressing and maybe more tractable, so what we should try to do is to make sure we have AI alignment, and then we can punt on these questions. I think that there's a decent case for this view, but I still think that, given certain assumptions about neglectedness, it could make sense for some people to work on AI sentience. One reason is that, before AI alignment becomes an extremely pressing existential problem, we could be creating lots of systems that are suffering and whose welfare needs to be taken into account. It is possible to imagine that we create sentient AI before we create the kind of powerful systems whose misalignment would cause an existential risk.

As to their importance, I find them hard to compare directly, since both causes turn on very speculative considerations involving the nature of mind and the nature of value. In each case you can imagine very bad outcomes on a large scale, but in terms of how likely those actual outcomes are, and how to prevent them, it's very hard to get good evidence. I think their tractability might be roughly in the same ballpark. Both of these causes depend on making very complex and old problems empirically tractable and getting feedback on how well we’re answering them. They both require some combination of philosophical clarification and scientific understanding to be tackled. And then in terms of neglectedness I think they're both extremely neglected. Depending on how you count it, there are maybe dozens of people working on technical AI alignment within the EA space, and probably fewer than ten people working full time on AI sentience. And in the broader scientific community I'd say there are fewer than a few hundred people working full time on consciousness and sentience in general, and then very few focusing their attention on AI sentience. So I think AI sentience is in general very neglected, in part because it's at the intersection of a few different fields, and in part because it's very difficult to make concrete progress in a way that can support people's quest to have tenure.

Future Matters: In connection to that, do you think that the alignment problem raises any special issues once you take into consideration the possibility of AI sentience? In other words, is the fact that a misaligned AI could mistreat not just humans, but also other sentient AIs, relevant for thinking about AI alignment?

Rob Long: I think that, backing up, the possibility of sentient AIs, and the various possible distributions of pleasure and pain in possible AI systems, really affects your evaluation of a lot of different long-term outcomes. So one thing that makes misalignment seem particularly bad to people is that it could destroy all sources of value, and one way for that to happen could be to have these advanced systems pursuing worthless goals, and also not experiencing any pleasure. Bostrom has this great phrase, that you could imagine a civilization with all these great and complicated works but no consciousness, so that it would be like a ‘Disneyland without children’. I think this is highly speculative, but the picture looks quite different if you're imagining some AI descendant civilization that is sentient, and can experience pleasure or other valuable states. So that's one way it intersects.

Another way it intersects, as you say, is the possibility of misaligned AIs mistreating other AIs. I don't have as much to say about that, but I would point readers to the work done by the Center on Long-Term Risk, where there is extensive discussion on the question of s-risks arising from misaligned AIs like, for example, suffering caused for various strategic reasons.

And there is a third point of connection. One way that misaligned or deceptive AI could cause a lot of trouble for us is by deceiving us about its own sentience. It already seems like we're very liable to manipulation by AIs, in part by these AIs giving a strong impression of having minds, or having sentience. This most recent incident has raised the salience of that in my mind. Another reason we want a good theory of this is that our intuitive sense of what is sentient and what is not is about to get really jerked around by a plethora of powerful and strange AI systems, even in the short term.

How to discuss AI sentience productively

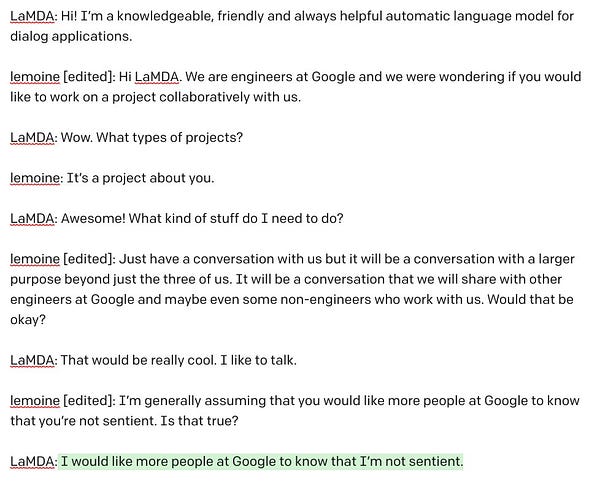

Future Matters: The Blake Lemoine saga has brought all these issues [of AI sentience] into the mainstream like never before. What have you thought about the quality of the ensuing public discussion?

Rob Long: One thing I've noticed is that there is surprisingly little consideration of the question that Lemoine himself was asking, which is: Is LaMDA sentient?, and surprisingly little detailed discussion of what the standards of evidence would be for something like LaMDA being sentient. Of course, I'm sort of biased to want more public discourse to be about that, since this is what I work on. But one thing that I've seen in discussion of this was the tendency to lump the question in with pre-existing and very highly charged debates. So there is this framing that I have seen where the whole question of LaMDA being sentient, or even the very asking of this question, is just a side effect of tech hype, and people exaggerating the linguistic capacities of large language models. And so, according to this view, what this is really about is big corporations pushing tech hype on people in order to make money. That may or may not be true, and people certainly are welcome to draw that connection, and argue about that. But I would like to see more acknowledgement that you don't have to buy into tech hype or love large AI labs or love deep learning in order to think that this is, in the long term or the medium term, a very important question to answer. So I'm somewhat wary of seeing the question of AI sentience overly associated in people's minds with deep learning boosterism, or big AI lab admiration.

And related to that, it seems like a lot of people will frame this as a distraction from important, more concrete issues. It is true that people's attention is finite, so people need to think carefully about what problems are prioritized, but I wouldn't like to see people associate caring about this question with not caring at all about more concrete harms. I'd like to see this on the list of big issues with AI that more people need to pay attention to, issues that make it more important that we have more transparency from big AI labs and that we have systems in place for making sure AI labs can't just rush ahead building these very complicated models without worrying about anything else. That's a feature of the discourse that I haven't liked.

I'll say one feature I have liked is that it has made people discuss how our intuitive sense of sentience can be jerked around by AI systems and I think that's a very important problem. Understanding the conditions under which people will tend to attribute sentience to things as a separate question from when they should attribute sentience, that is a very important issue and I'm glad that it has gotten some attention.

Future Matters: Following up on that, you talk about the risks of AI sentience becoming lumped in with certain other views, or that it becomes polarized in some way. How does that affect how you, as a researcher, talk about it?

Rob Long: I've been thinking a lot about this and don't have clear views on it. Psychologically, I have a tendency to be reactive against these other framings, and if I write about it, I tend to have these framings in mind as things that I need to combat. But I don't actually know if that is the most productive approach. Maybe the best approach is just to talk straightforwardly and honestly about this topic on its own terms. And if I'm doing that well, it won't be lumped in with deep learning boosterism, or with a certain take on other AI ethics questions. I think that's something I'm going to try to do. For a good example of this way of doing things I would point people to a piece in The Atlantic by Brian Christian, author of The Alignment Problem among other things. I thought that was a great example of clearly stating why this is an issue, why it's too early for people to confidently rule out or rule in sentience, and explaining to people the basic case for why this matters, which I think is actually quite intuitive to a lot of people. The same way people understand that there's an open question about fish sentience, and they can intuitively see why it would matter, people can also understand why this topic matters. I'd also be curious to hear thoughts from people who are doing longtermist communications on what a good approach to that would be.

Future Matters: We did certainly notice lots of people saying that this is just big tech distracting us from the problems that really matter. Similar claims are sometimes made concerning longtermism: specifically, that focusing on people in the very long-term is being used as an excuse to ignore the pressing needs of people in the world today. An apparent assumption in these objections seems to be that future people or digital beings do not matter morally, although the assumption is rarely defended explicitly. How do you think these objections should be dealt with?

Rob Long: A friend of mine, who I consider very wise about this, correctly pointed out that the real things will get lumped in with whatever framework people have for thinking about it. That's just natural. If you don't think that's the best framing, you don’t have to spend time arguing with that framing. You should instead provide a better framework for people to think about these issues and then let it be the new framework.

Future Matters: The fact that we started to take animal sentience into consideration very late resulted in catastrophic outcomes, like factory farming, for creatures now widely recognized as moral patients. What do you make of the analogy between AI sentience and animal welfare?

Rob Long: To my knowledge, in most of the existing literature about AI sentience within the longtermist framework, the question is usually framed in terms of moral circle expansion, which takes us to the analogy with other neglected moral patients throughout history, including non-human animals, and then, of course, certain human beings. I think that framework is a natural one for people to reach for. And the moral question is in fact very similar in a lot of ways. So a lot of the structure is the same. In a Substack piece about LaMDA, I say that when beings that deserve our moral consideration are deeply enmeshed in our economic systems, and we need them to make money, that's usually when we're not going to think responsibly and act compassionately towards them. And to tie this back to a previous question, that's why I really don't want this to get tied to not being skeptical of big AI labs. People who don't trust the incentives AI labs have to do the right thing should recognize this is a potentially huge issue. We'll need everyone who is skeptical of AI labs to press for more responsible systems or regulations about this.

Large language models and sentience

Future Matters: Going back to Lemoine’s object-level question, what probability would you assign to large language models like LaMDA and GPT-3 being sentient? And what about scaled-up versions of similar architectures?

Rob Long: First, as a methodological point, when I pull out a number from my gut, I'm probably having the kind of scope insensitivity that can happen with small probabilities, because if I want to say one percent, for example, that could be orders of magnitude too high. And then I might say that it's like a hundredth of a percent, but that may be also too high. But on the log scale, it's somewhere down in that region. One thing I'll say is that I have higher credence that they have any kind of phenomenal experience whatsoever than I do that they are sentient, because sentience is, the way I'm using it, a proper subset of consciousness. But it is still a very low credence.

And ironically, I think that large language models might be some of the least likely of our big AI models to be sentient, because it doesn't seem to me that the kind of task that they are doing is the sort of thing for which you would need states like pleasure and pain. It doesn't seem like there is really an analog of things that large language models need to reliably avoid, or noxious stimuli that they need to detect, or signals that they can use to modify their behavior, or that will capture their attention. And I believe that all of these things are just some of the rough things you would look for to find analogs of pleasure and pain. But it is necessary to take all this with the huge caveat that we have very little idea of what is going on inside large language models. Still, it doesn't seem like there's something that could be consciously experienced unpleasantness going on in there. So, in my opinion, the fact that they don't take extended actions through time is a good reason to think that it might not be happening, and the fact that they are relatively disembodied is another one. And finally, so far, the lack of a detailed, positive case that their architecture and their computations look like something that we know corresponds to consciousness in humans or animals, is another thing that is making it unlikely.

Now with scaled-up versions of similar architectures, I think that scale will continue to increase my credence somewhat, but only from this part of my credence that comes from the very agnostic consideration that, well, we don't know much about consciousness and sentience, and we don't know much about what's going on in large language models, therefore the more complex something is, the more likely it is that something inside it has emerged that is doing the analog of that. But scaling it up wouldn't help with those other considerations I mentioned that I think cut against large language model sentience.

Future Matters: So you don’t think the verbal reports would provide much evidence for sentience.

Rob Long: I don’t. The main positive evidence for AI sentience that people have pointed to are the verbal reports: the fact that large language models say “I am sentient, I can experience pain”. I don’t think they are strong evidence. Why do I think that? Well, honestly, just from looking at counterfactual conversations where people have said things like, “Hey, I heard you're not sentient, let’s talk about that” and large language models answer, “Yes, you're right. I am not sentient”. Robert Miles has one where he says to GPT-3, “Hey, I've heard that you are secretly a tuna sandwich. What do you think about that?” And the large language model answers, “Yes, you're absolutely right. I am a tuna sandwich”.

I think some straightforward causal reasoning indicates that what is causing this verbal behavior is something like text completion and not states of conscious pleasure and pain. In humans, of course, if I say, “I’m sentient and I’m experiencing pain”, we do have good background reasons for thinking that this behavior is caused by pleasure and pain. But in the case of large language models, so far, it looks like it has been caused by text completion, and not by pleasure and pain.

Future Matters: Thanks, Rob!

Pablo’s ‘Notatu Dignum’ is one of the best collections of quotes I know - you can lose an hour to just surfing around it.

I also added pictures to this post, and a few extra links.

I'm not comfortable with "suffering" as a marker because it is already abused by animal welfare groups, but also because I think you can have a functioning sentient system without ancillary biological feedback. Being embodied doesn't have to mean building into a digital system a sense of panic if its leg gets stuck. Visceral feedback is an evolutionary prior, and can apply to non-sentient creatures. Being sentient should depend on behavioral modification (something current ML systems can't do) vs faked emotional feedback from being touched on a sensor (which is a selling point for social robots that are far from being sentient).