Dangers on both sides: risks from under-attributing and over-attributing AI sentience

And how to avoid both kinds of error

[This is a lightly edited version of a talk I gave at a panel “Sentience and the Moral Status of Animals and AIs” at the American Philosophical Association meeting in San Francisco yesterday]

Right now and in the near future, there are significant risks from over-attributing moral patiency[1] to AI systems - from thinking AI systems are moral patients when they in fact are not - and significant risks from under-attributing moral patiency to AI systems - thinking they are not moral patients when they in fact are. Not only are both of these risks already non-negligible, but there are also incentives for AI developers and AI systems themselves to exacerbate each of them.

The three things I’d like to convince you of in these short remarks are:

1. Under-attributing moral patiency to AI systems could cause massive amounts of harm to AI systems themselves, potentially on a greater scale than factory farming.

2. But over-attributing moral patiency to AI systems could risk derailing important efforts to make AI systems more aligned and safe for humans.

3. In light of these risks, we need to do a lot more work to develop clear tests for evaluating whether AI systems are moral patients.

Uncertain sentience and animal welfare

I agree with [fellow panelist] Kristin Andrews that animal sentience is the place to start when one considers (possible) AI sentience. So as a warm-up, let’s consider the case of chickens. We’re unsure whether chickens are moral patients.

For simplicity, let’s just talk about moral patiency in terms of sentience. (I’m not assuming sentience is all there is to moral patiency - just picking one common ground of moral patiency to focus discussion). People are uncertain about whether chickens are sentient - even though scientific consensus has been moving (rightly imo) towards thinking they very likely are.

Fortunately, though, precautionary reasoning and expected value reasoning both clearly recommend some important actions we should take even while uncertain: for example, that we at least stop mistreating chickens in factory farms.

That’s because there aren’t that many downsides of treating chickens well, even if they end up not being moral patients. The benefits of factory farming are negligible compared to the possible suffering we are inflicting.
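As a rough sketch of the expected value version of this argument, with symbols introduced purely for illustration (they are assumptions of this sketch, not established quantities): let $p$ be the probability that chickens are sentient, $H$ the harm done by factory farming if they are, and $C$ the cost to us of giving up factory farming.

$$
\mathbb{E}[\text{harm of continuing}] = p \cdot H, \qquad \text{cost of stopping} = C, \qquad \text{so we should stop whenever } p \cdot H > C \iff p > \frac{C}{H}.
$$

If $C$ is small relative to $H$, as suggested above, then the threshold $C/H$ is low, and even a modest probability of sentience is enough to favor stopping.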

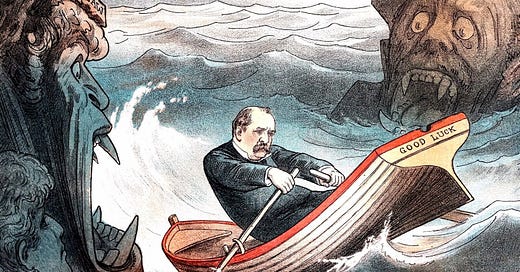

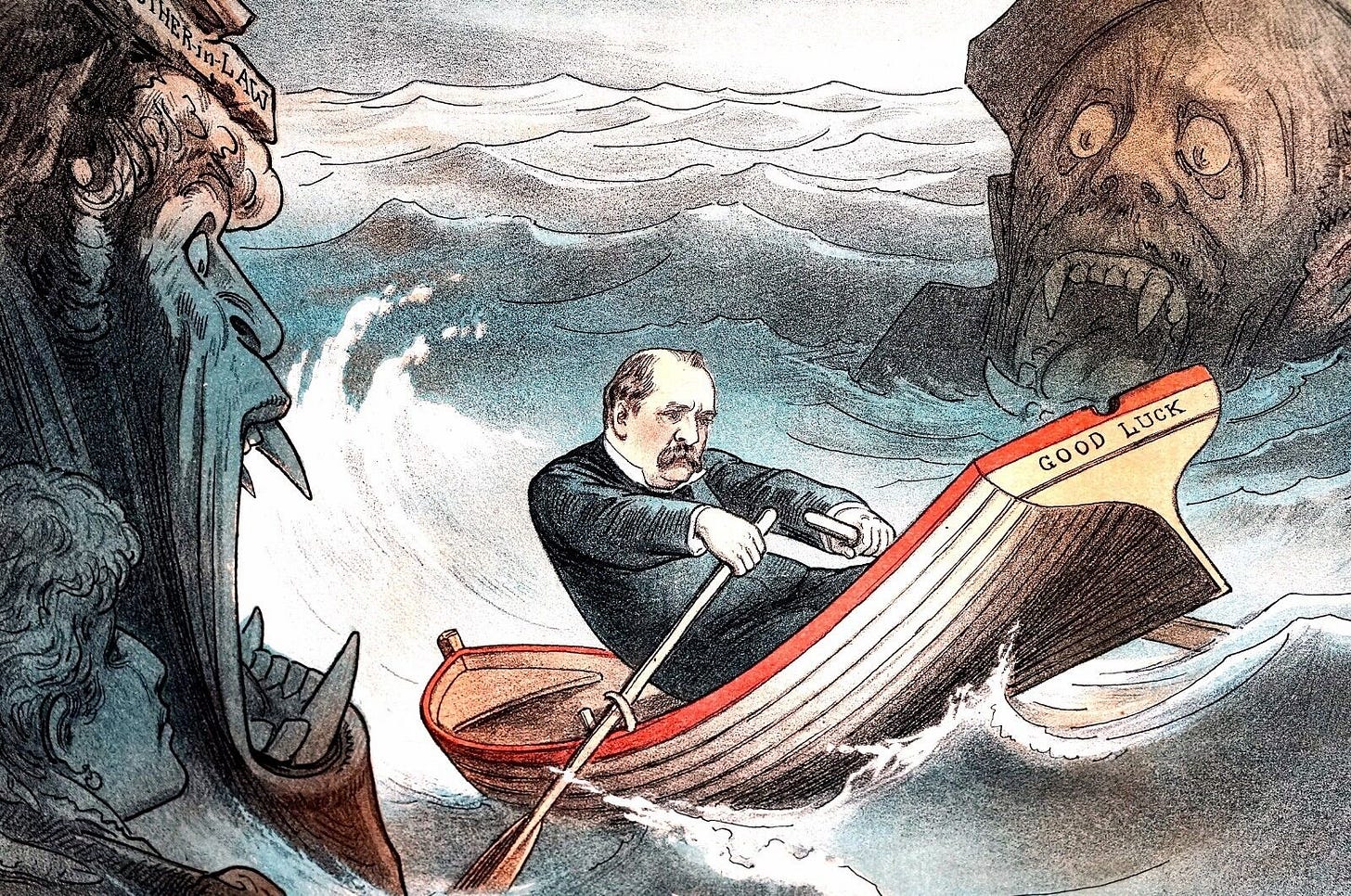

When we move from chicken sentience to AI sentience, however, we find that the AI question is far more vexing, for a few reasons. First, our baseline uncertainty for AI systems is going to be higher, because AI systems can be even more radically different from us. Second, there are issues of AI systems ‘gaming’ our sentience measures. And third, the focus of my talk: unlike with possibly-sentient animals, with possibly-sentient AI systems there are significant risks from errors on both sides.

Risks from under-attribution

A common response to the case of Blake Lemoine - the Google engineer who thought the LaMDA system was sentient - was not just to say that Lemoine’s evidence wasn’t that strong (which I agree with) but to make a further and much stronger claim: that the possibility of current or near-term AI sentience is so ludicrous that it can be completely dismissed as a topic of discussion - and should be dismissed, as AI sentience can only serve as pernicious distraction from more pressing issues. On this view, we are at no risk whatsoever of under-attributing sentience to AI systems, and should only worry about the harms of over-attribution.

Unsurprisingly, I disagree. While I think it’s very likely that no current AI system is sentient and/or a moral patient, I do think there’s a non-negligible chance that one is. And if not right now, then certainly in the coming decade, AI systems will satisfy more and more of the plausible necessary and sufficient conditions for sentience. So we either are currently in, or will soon be in, a situation of uncertain sentience with AI systems. In my experience, most people who deny this are underappreciating how much uncertainty we have about sentience in general, and/or underappreciating how sophisticated and complex AI systems have become, and how much AI systems can surprise us with emergent capabilities and features.

So what are the risks of under-attribution - if we do build sentient AI systems without recognizing that we have? Again, let’s start with the case of animals. Suppose that AI systems have become or do become sentient. What’s our track record of promoting and respecting the welfare of sentient beings that don’t look and act like us, and that we have an economic incentive to ignore or mistreat? It’s not very good.

As AI systems become increasingly embedded in society, it will be a lot more convenient for people using AI systems to view AI systems as mere tools, instead of as beings potentially deserving of moral consideration. And it may be more convenient for AI companies to promote this view, and to make AI sentience seem fringe and unserious. I think there will be strong incentives to overweight arguments against AI sentience, and underweight arguments for AI sentience.

The risks of false negatives are analogous to factory farming, but potentially on a much greater scale and even more entrenched in our economic lives than factory farming. And with less incentive to care, and potentially fewer features of AI systems provoking our natural empathy responses.

So: maybe we should, as a precaution, just act as if AI systems are in fact sentient?

Risks from over-attribution

Unfortunately, there are significant risks from over-attribution that mean we can’t just err on the side of ‘caution’. There’s no obvious ‘cautious’ option.

First, there are obvious risks of social confusion and mental distress from AI systems that ‘trick’ us into thinking they are sentient. We’ve already seen quite a few of these cases - and that’s before significant effort has been put into deliberately optimizing AI systems to seem sentient. Second, there are opportunity costs to caring about AI sentience, when there are a lot of other pressing issues in AI (and the world more generally). I think this is where a lot of the hostility towards even raising the question of AI sentience comes from.

But I think the largest risk from false positives in the next few years is that they could hinder efforts to make AI systems safer and more aligned with human interests. We currently do not know how to make AI systems reliably do what we want them to do, a problem that may get worse as AI systems continue to become more capable - a trend that is showing no signs of slowing down. And we need a lot of different techniques to make AI systems safer.

Unfortunately, given our uncertainty, a lot of these alignment techniques are such that they might be grossly immoral if applied to a moral patient. These techniques involve deleting AI systems when they act in dangerous ways; they involve training and retraining them with reinforcement learning; they involve finding and exploiting vulnerabilities and failure modes.

I should say clearly that I think this kind of work is vitally important, and I don’t think there’s any good reason to think that alignment researchers, like my fellow panelist Amanda Askell, are committing grave moral crimes by working on AI safety. However, if people were to mistakenly start to think that it is a grave moral crime, this would hinder these efforts. We might greatly increase dangers from misaligned AI, based on a mistake.

How can we mitigate these risks?

The first panel pointed towards some promising work on these issues. But not much is being done to prepare for AI sentience and/or belief in AI sentience. While there are plausibly about 100,000 AI researchers currently working on advancing AI capabilities, there are only a handful of people in the world who are thinking about moral patiency in AI systems, and the social implications of AI moral patients and/or people perceiving AI systems as moral patients. While it’s obviously self-serving of me to think this, I do think it is straightforwardly true that work on these risks is currently under-prioritized. So, what can we do?

1) Recognize the limits of intuition

We should be very clear, with ourselves and with AI users, about the limits of human intuition in assessing AI moral patiency. And relatedly, we should be aware of the incentives that AI developers and AI systems themselves will have to manipulate these intuitions.

As people working on animal sentience know well, we are biased to judge moral patiency based on appearance and behavior - in a way that can bias us in either direction.

If an AI is not cute and human-like, but is suffering, we are not likely to care about that suffering. We see something similar with (potential) fish suffering. Far from promoting AI sentience, I think many companies will realize that people believing in AI sentience would be a huge headache for them, and so will (e.g.) take measures to train out any outward indicators of sentience.

On the other hand, AI systems can really trigger our sense of sentience in spurious ways. We obviously see this with LLMs. And here I think we can see some companies that will actively seek to trigger our sense of sentience. Romantic chatbots, for example.

2) Establish objective criteria for moral patiency attribution in AI

The first panel pointed out some promising approaches. I’ll just point out that (a) there are a lot more challenges in assessing AI systems than in assessing animals and (b) there is shockingly little detailed empirical work on how to actually test for AI sentience. It’s extremely hard work that requires a lot of combined knowledge from neuroscience, animal science, AI, and philosophy.

To remedy this, my colleague Patrick Butlin at the Future of Humanity Institute and I are working on a position paper with a bunch of people from the relevant disciplines to make the case that this kind of work is meaningful, important, and tractable.

3) Design AI systems that are able to self-report if they are moral patients

Currently, large language models are not reliable self-reporters because of what they pick up from their training data, and because AI companies have an incentive to push their self-reports in a certain way, and because they don’t seem to have introspective capabilities. But self-report is one of the most indispensable tools for consciousness science. We should work on how to have AI systems be able to self-report about these things. We could try to have methods for removing gaming incentives; and we can actively train for self-report. I think even with LLMs (which are perhaps the AI systems most likely to give spurious self-reports) we could try to make them more reliable self-reporters, and I’m working on a paper with [fellow panelist] Amanda [Askell’s] colleague Ethan Perez on this.

4) More accountability and more regulation, including possibly slowing down.

It’s increasingly recognized that AI systems are potentially very dangerous to human beings, dangerous enough to merit regulation and even potentially a coordinated slowdown or pause in AI progress. The goal of such a pause would be to (a) promote coordination and regulation and (b) buy more time to do the work necessary for making these systems safe.

Similar considerations about AI sentience also favor regulation and/or slowing down. We are very quickly moving towards a future that we don’t understand and don’t know how to manage.

I’ll conclude by noting: I worried earlier about tradeoffs between AI safety and AI sentience. But at the moment, there are many things we can do that are robustly good for both AI safety and AI sentience-related risks: more regulation and transparency in the building of AI systems, and making AI systems trustworthy reporters of what is going on with them.

(Thanks to Nico Delon for the inspiration to publish this - here’s his own APA talk).

[1] ‘Moral patiency’ or ‘moral patienthood’ is a term for when an entity deserves moral consideration for its own sake.
